Load Balance Anything, Anywhere with ngrok (Sponsored)

Today’s cloud-native workloads often span multiple clusters, container runtimes, networks, and even clouds, but existing load balancers are limited to balancing only within a single cluster or a single network.

We designed Endpoint Pools so that you can balance across endpoints that are running on different networks, regions and cloud providers. You can even balance across cloud infrastructure and your own hardware. Host one replica in your office's server closet, another on a Droplet in Frankfurt, and a half-dozen in us-east-1.

Thank you, ngrok, for sponsoring this week’s newsletter. Now, back to the newsletter.

Hi, this is Kisan from The Cloud Handbook, and welcome to this week’s issue! If you're working as a Cloud Engineer, Cloud Architect, SRE, or DevOps Engineer, or aspiring to one of these roles, this guide covers the important system design concepts that will level up your cloud skills.

System design isn't just about drawing boxes and arrows. It's about understanding how to build scalable, reliable, and maintainable systems that can handle real-world challenges. In cloud environments, these principles become even more critical as you leverage managed services, distributed architectures, and global infrastructure.

Here are 20 concepts you should know as a cloud builder.

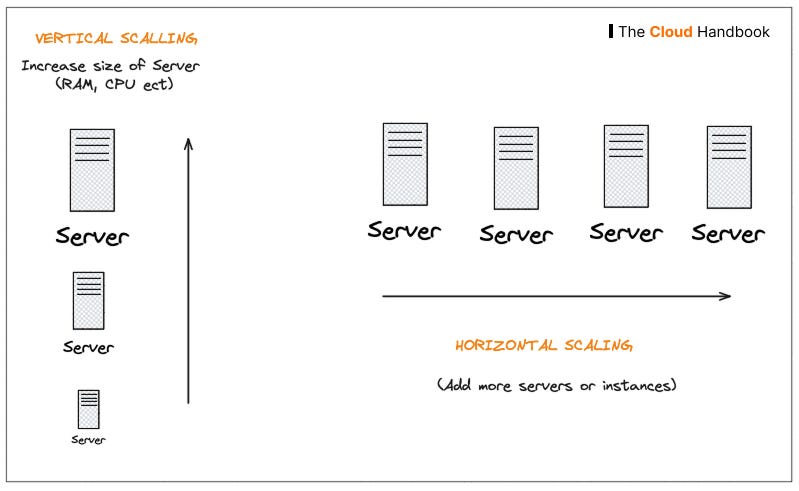

Scalability

Understanding scalability means knowing when to scale horizontally (adding more servers) versus vertically (upgrading existing resources). In cloud environments, horizontal scaling often provides better resilience and cost efficiency. Auto-scaling in AWS EC2 (Auto Scaling groups), Google Cloud Compute Engine (managed instance groups), or Azure Virtual Machine Scale Sets automatically adjusts capacity based on demand, typically by tracking metrics such as CPU utilization or request count.
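To make "adjust capacity based on demand" concrete, here is a minimal sketch of a target-tracking rule, assuming a single metric such as average CPU utilization. It uses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × currentMetric / targetMetric)). The function name and the min/max bounds are illustrative, not any provider's API.

```python
import math

def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional target tracking: scale so the per-replica metric approaches the target."""
    if current <= 0 or target_metric <= 0:
        raise ValueError("current replicas and target metric must be positive")
    # If each replica runs at 90% CPU and the target is 60%, we need 1.5x the replicas.
    raw = math.ceil(current * current_metric / target_metric)
    # Clamp to configured bounds (illustrative values, like a group's min/max size).
    return max(min_replicas, min(max_replicas, raw))

print(desired_replicas(4, 90.0, 60.0))  # scale out: 6
print(desired_replicas(6, 20.0, 60.0))  # scale in: 2
```

Real autoscalers layer tolerances and cooldown periods on top of this so capacity doesn't flap on every small metric change.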

Consider Netflix's approach: they use horizontal scaling across thousands of microservices rather than building massive monolithic applications. This allows them to handle millions of concurrent users while maintaining service availability even when individual components fail.

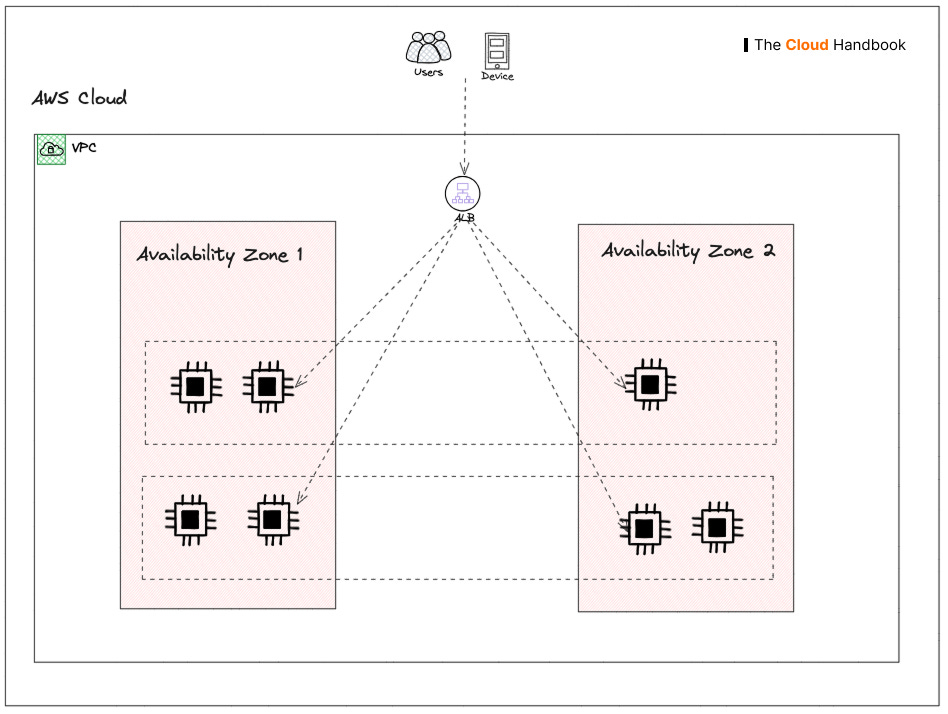

Availability

Availability goes beyond just uptime: it's about designing systems that keep serving users when components fail. This involves implementing redundancy across multiple availability zones, setting up automated failover mechanisms, and using circuit breakers that stop calls to a failing dependency so one failure doesn't cascade through the system.
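A circuit breaker is small enough to sketch. This minimal, single-threaded version trips "open" after a configurable number of consecutive failures, fails fast during a cooldown, then lets a trial call through (half-open). The class and parameter names are illustrative assumptions, not a specific library's API.

```python
import time

class CircuitBreaker:
    """Minimal breaker: closed -> open after N failures -> half-open after a cooldown."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Fail fast instead of piling more load onto a struggling dependency.
                raise RuntimeError("circuit open")
            # Cooldown elapsed: half-open, so this call goes through as a trial.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        # A success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

The injectable `clock` makes the state transitions easy to test without real waiting.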

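The key insight is that failures will happen. The question isn't if, but when. Amazon S3, for example, is designed for 99.999999999% (11 nines) of durability by redundantly storing objects across multiple Availability Zones and regularly verifying data integrity with checksums. Durability is distinct from availability: S3 Standard is designed for 99.99% availability, so data that is never lost can still be briefly unreachable.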
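Redundancy pays off because independent failures rarely coincide. A back-of-the-envelope sketch, assuming replicas fail independently (zones can share failure modes, so treat the result as an optimistic bound): if one replica is up with probability a, at least one of n replicas is up with probability 1 - (1 - a)^n. Components that a request must pass through in series multiply instead.

```python
def parallel_availability(a: float, n: int) -> float:
    """Probability that at least one of n independent replicas is up."""
    return 1 - (1 - a) ** n

def serial_availability(*components: float) -> float:
    """A request that must pass through every component succeeds only if all are up."""
    result = 1.0
    for a in components:
        result *= a
    return result

print(f"{parallel_availability(0.99, 1):.4%}")  # one zone
print(f"{parallel_availability(0.99, 3):.4%}")  # three zones
print(f"{serial_availability(0.999, 0.999):.4%}")  # two dependencies in a chain
```

The serial case is the flip side: every dependency you chain together lowers overall availability, which is why redundancy matters at each tier.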

Reliability

Reliability in software engineering is all about building systems that keep working when things go wrong.

Think of reliability like a well-built bridge. A reliable bridge doesn't just work on sunny days; it keeps functioning during storms, heavy traffic, and even when some parts are under maintenance. Similarly, reliable cloud software keeps serving users even when servers crash, networks hiccup, or traffic suddenly spikes.

Key aspects of reliability include:

Fault tolerance - Your system gracefully handles failures instead of completely breaking down. If one server dies, others pick up the work automatically.

Consistent performance - Users get the same quality experience whether it's 2 AM or during peak hours when millions are online.

Quick recovery - When something does break, the system either fixes itself or gets fixed fast with minimal user impact.

Predictable behavior - The software does what users expect it to do, every time they use it.
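One everyday building block for fault tolerance and quick recovery is retrying transient failures with exponential backoff and jitter. This sketch uses the "full jitter" variant: wait a random time between zero and an exponentially growing, capped ceiling. The function name and defaults are assumptions for illustration.

```python
import random
import time

def retry_with_backoff(func, max_attempts=5, base_delay=0.1, max_delay=5.0,
                       sleep=time.sleep, rng=random.random):
    """Call func, retrying on exception with exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return func()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Exponential ceiling: 0.1s, 0.2s, 0.4s, ... capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: random delay in [0, ceiling) so clients don't retry in lockstep.
            sleep(ceiling * rng())
```

Only wrap idempotent operations this way; blindly retrying a non-idempotent call such as "charge this card" can apply it twice.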

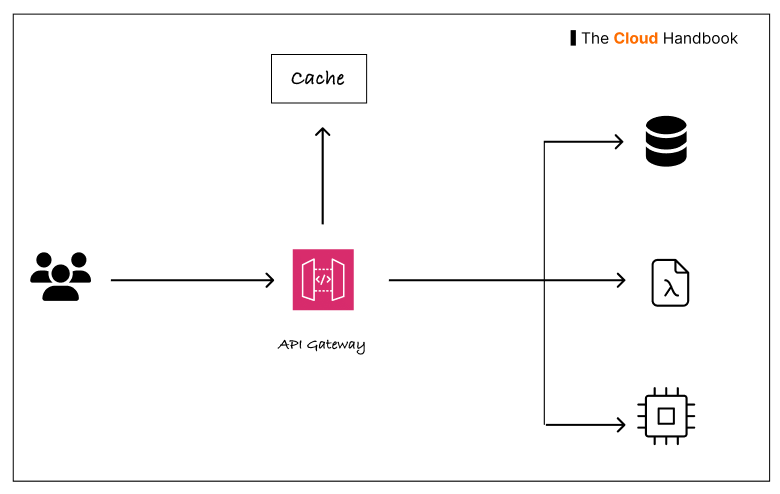

Caching

Caching is a technique that stores frequently accessed data in temporary, fast storage (called a cache) so that future requests for that data can be served faster.

💡 Why Cache?

Speed: Fetching from cache is faster than fetching from the original source (like a database or server).

Efficiency: Reduces load on servers and databases.

Better user experience: Faster apps and websites.

Services like Amazon API Gateway provide a built-in caching feature that is easy to set up; see the API Gateway documentation on API caching to learn more.
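When you cache inside your own application, a common pattern is cache-aside: check the cache, fall back to the source on a miss, and store the result with a time-to-live (TTL) so stale entries expire. A minimal in-memory sketch; `load_from_db` is a hypothetical stand-in for a slow database query, and a real deployment would usually use a shared cache such as Redis or Memcached rather than a per-process dict.

```python
import time

class TTLCache:
    """Tiny in-process cache-aside helper with per-entry expiry."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (value, expires_at)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key, loader):
        entry = self._store.get(key)
        now = self.clock()
        if entry is not None and entry[1] > now:
            self.hits += 1
            return entry[0]  # fresh cache hit
        # Miss or expired entry: go to the original source and repopulate.
        self.misses += 1
        value = loader(key)
        self._store[key] = (value, now + self.ttl)
        return value

def load_from_db(user_id):
    # Hypothetical slow lookup standing in for a real database query.
    return {"id": user_id, "name": f"user-{user_id}"}

cache = TTLCache(ttl_seconds=60)
cache.get_or_load(42, load_from_db)  # miss: goes to the "database"
cache.get_or_load(42, load_from_db)  # hit: served from memory
```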

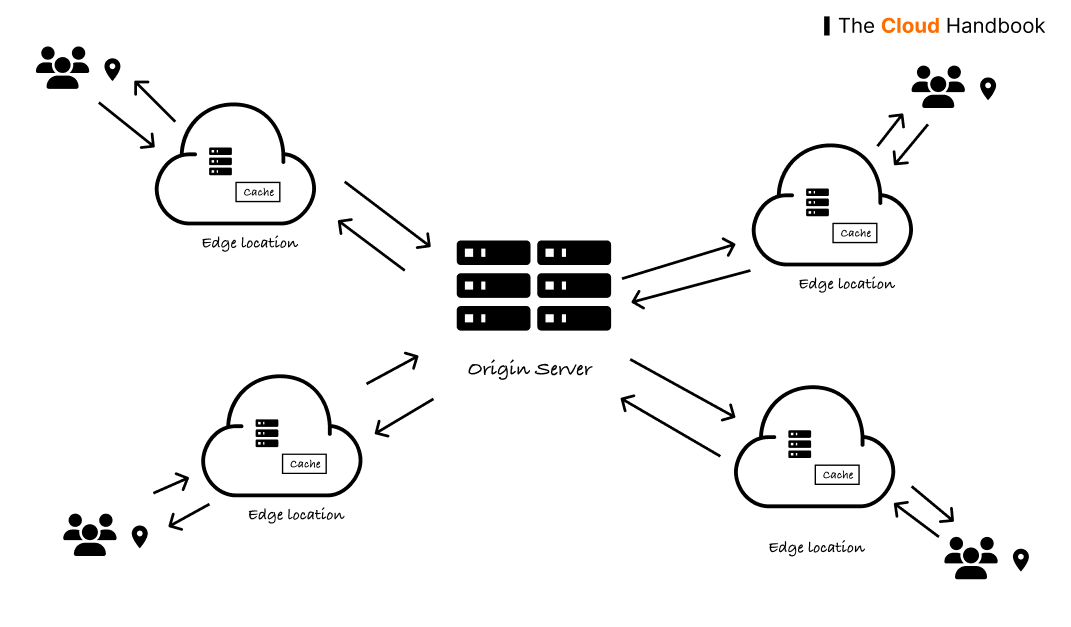

CDNs

A CDN (Content Delivery Network) is a network of servers distributed across different geographic locations that helps deliver content—like websites, images, videos, scripts—faster and more reliably to users.

How It Works:

When someone visits a website that uses a CDN:

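Instead of loading everything from the origin server (which may be far away), the user is routed to a nearby CDN edge server. If the edge already has a cached copy, it serves it directly; if not, it fetches the content from the origin once and caches it for subsequent users.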

This reduces latency, speeds up loading time, and improves the user experience.
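Here is a toy model of that flow, assuming made-up edge locations and client latencies. The client is routed to the lowest-latency edge, which serves cached objects directly and contacts the origin only on a cache miss. Real CDNs route via DNS or anycast and honor HTTP caching headers such as `Cache-Control`; none of that is modeled here.

```python
class Edge:
    def __init__(self, name: str):
        self.name = name
        self.cache = {}  # path -> content

class ToyCDN:
    def __init__(self, edges, origin):
        self.edges = {e.name: e for e in edges}
        self.origin = origin  # callable: path -> content (hypothetical origin server)
        self.origin_fetches = 0

    def fetch(self, path: str, latencies_ms: dict):
        # Pick the edge with the lowest measured latency for this client.
        edge = self.edges[min(latencies_ms, key=latencies_ms.get)]
        if path not in edge.cache:
            # Cache miss: pull from the (possibly distant) origin once, then keep a copy.
            self.origin_fetches += 1
            edge.cache[path] = self.origin(path)
        return edge.name, edge.cache[path]

cdn = ToyCDN([Edge("frankfurt"), Edge("virginia")], origin=lambda p: f"<contents of {p}>")
client = {"frankfurt": 12, "virginia": 95}  # hypothetical latencies for a client in Europe
cdn.fetch("/logo.png", client)  # miss: fetched from origin via frankfurt
cdn.fetch("/logo.png", client)  # hit: served from frankfurt's cache
```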

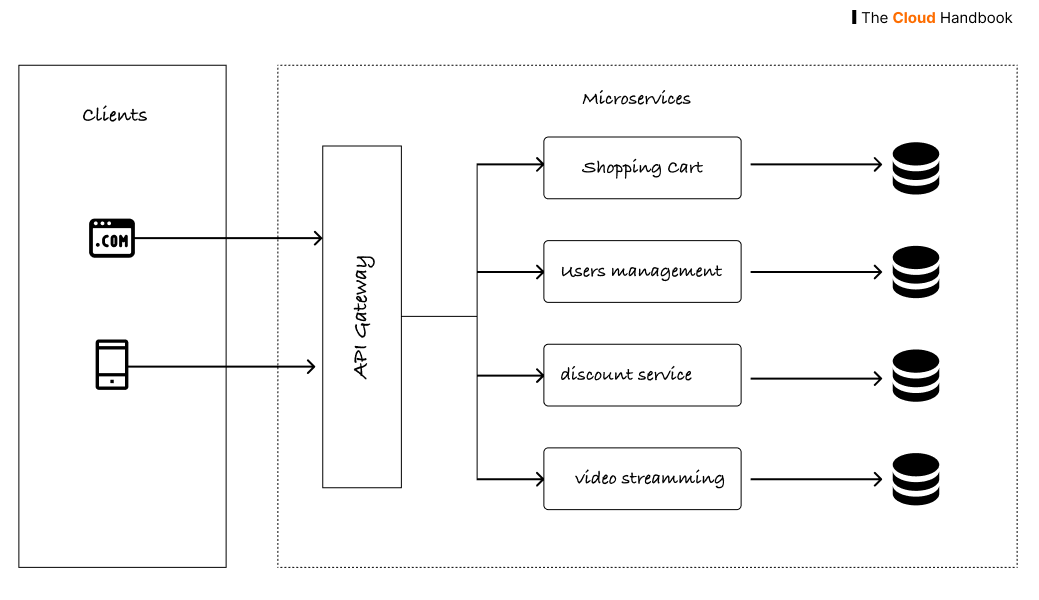

Microservices

Microservices architecture is like breaking down a big, complicated machine into many small, specialized parts that work together.

Instead of building one massive application that does everything, you create many tiny applications that each do one specific job really well. For example:

One service handles user logins

Another manages shopping carts

Another processes payments

Another sends emails

The choice between microservices and monolithic architecture depends on team size, complexity, and organizational maturity. Microservices offer independent scaling and deployment but introduce complexity in service communication and data consistency. Start with a well-structured monolith and evolve to microservices as needed.

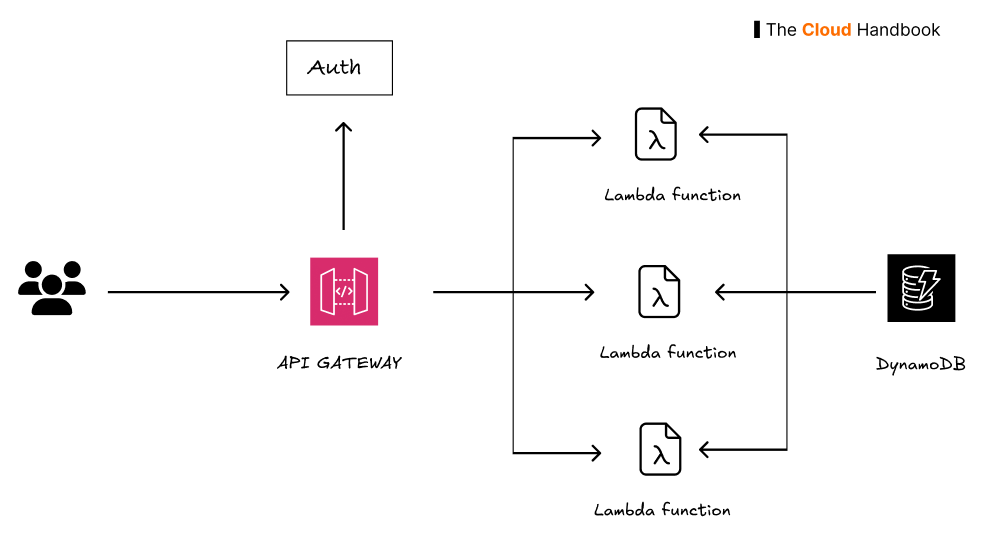

Serverless Architecture

Serverless architecture is like using a taxi service instead of owning a car: you only pay when you actually need to go somewhere, and someone else handles all the maintenance. In serverless, you write your code as small functions that run automatically when triggered (for example, when a user clicks a button or uploads a file), and you don't manage any servers yourself.

Serverless platforms like AWS Lambda, Google Cloud Functions, and Azure Functions offer this model. However, they come with constraints around execution time (an AWS Lambda invocation can run for at most 15 minutes, for example), cold starts, and vendor lock-in that must be carefully considered.
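For a feel of the programming model, here is a minimal Python AWS Lambda handler for the "user uploads a file" trigger. The `lambda_handler(event, context)` signature is how Lambda invokes Python functions, and the `Records[].s3.bucket.name` and `Records[].s3.object.key` fields follow the S3 event notification format. The processing step is a placeholder, and the sample event is a hand-built, trimmed-down assumption for local testing.

```python
import urllib.parse

def lambda_handler(event, context):
    """Runs once per S3 event; Lambda provisions and scales the compute."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events (a space becomes '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        # Placeholder for real work, e.g. generating a thumbnail.
        processed.append(f"{bucket}/{key}")
    return {"processed": processed}

# Local test with a minimal hand-built event; context is unused here.
sample_event = {"Records": [{"s3": {"bucket": {"name": "uploads"},
                                    "object": {"key": "photos/cat+1.jpg"}}}]}
print(lambda_handler(sample_event, None))
```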

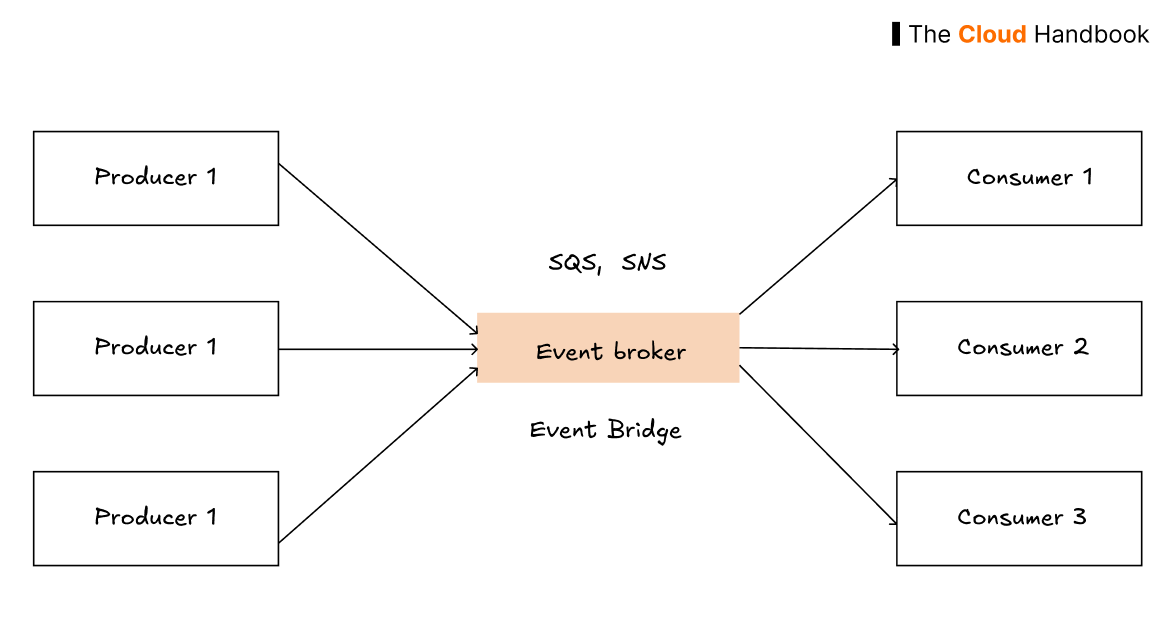

Event-Driven Architecture

Event-driven systems use events to trigger actions across decoupled services. This pattern is particularly powerful in cloud environments where services need to respond to changes in real-time without tight coupling.
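A minimal in-process sketch of the idea, assuming a hypothetical `order.placed` event: the checkout code publishes one event, and payment and email handlers react to it without knowing about each other. In the cloud, the bus would be a managed service such as Amazon EventBridge, Amazon SNS, or Google Cloud Pub/Sub, with delivery happening asynchronously over the network rather than through direct function calls.

```python
from collections import defaultdict
from typing import Callable

class EventBus:
    """In-process publish/subscribe: publishers never reference subscribers directly."""

    def __init__(self):
        self._handlers = defaultdict(list)  # event type -> list of handler callables

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Each subscriber reacts independently; adding one needs no publisher change.
        for handler in self._handlers.get(event_type, []):
            handler(payload)

bus = EventBus()
log = []
# Hypothetical services reacting to the same event.
bus.subscribe("order.placed", lambda e: log.append(f"charge card for order {e['order_id']}"))
bus.subscribe("order.placed", lambda e: log.append(f"email receipt for order {e['order_id']}"))
bus.publish("order.placed", {"order_id": 17})
```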

Load Balancers

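In AWS, Application Load Balancers (ALB) operate at Layer 7 and can route requests based on their content, such as the URL path, host name, or HTTP headers, while Network Load Balancers (NLB) work at Layer 4 (TCP/UDP) and forward connections without inspecting HTTP, which keeps latency very low. Understanding these differences helps you choose the right tool for your traffic patterns.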
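To illustrate what content-based routing means, here is a sketch of Layer 7 routing with hypothetical path prefixes and target pools: the balancer inspects the HTTP path, picks a target group, then spreads requests across that group round-robin. A Layer 4 balancer never sees the path; it would only do the second step, per connection.

```python
from itertools import cycle

class L7Router:
    def __init__(self, rules: dict, default_pool: list):
        # Longest prefix first, so a more specific rule wins over a shorter one.
        self.rules = sorted(((prefix, cycle(pool)) for prefix, pool in rules.items()),
                            key=lambda rule: len(rule[0]), reverse=True)
        self.default = cycle(default_pool)

    def route(self, path: str) -> str:
        for prefix, pool in self.rules:
            if path.startswith(prefix):
                return next(pool)  # round-robin within the matched target group
        return next(self.default)

# Hypothetical target groups.
router = L7Router({"/api": ["api-1", "api-2"], "/images": ["img-1"]}, default_pool=["web-1"])
result = [router.route(p) for p in ["/api/users", "/api/orders", "/api/cart", "/images/a.png", "/"]]
print(result)
```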

Message Queues

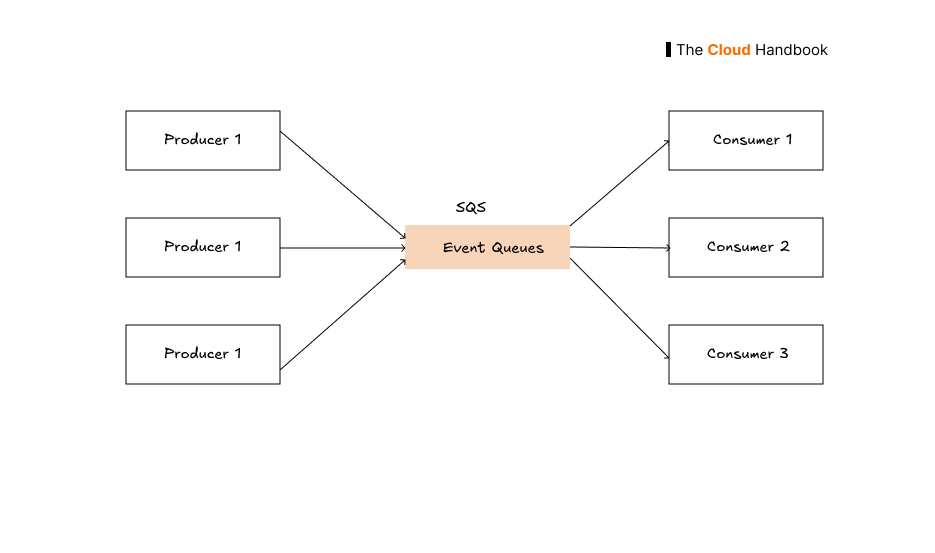

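Message queues like Amazon SQS, Apache Kafka, and Google Cloud Pub/Sub enable asynchronous communication between services. Producers write messages without waiting for consumers to process them, which improves resilience (a slow or failed consumer doesn't block the producer) and allows producers and consumers to scale independently.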
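A single-machine sketch of the decoupling, using Python's thread-safe `queue.Queue` as a stand-in for a managed queue such as SQS: the producer only enqueues, and the number of consumer workers can change without touching producer code. Managed queues add durability, visibility timeouts, and redelivery, none of which this sketch models.

```python
import queue
import threading

jobs = queue.Queue()
results = []
results_lock = threading.Lock()

def producer(n: int) -> None:
    for i in range(n):
        jobs.put({"order_id": i})  # fire and forget: no knowledge of consumers

def consumer() -> None:
    while True:
        msg = jobs.get()
        if msg is None:  # sentinel: shut this worker down
            jobs.task_done()
            break
        with results_lock:
            results.append(msg["order_id"] * 10)  # placeholder processing
        jobs.task_done()

NUM_CONSUMERS = 3  # scale consumers independently of the producer
workers = [threading.Thread(target=consumer) for _ in range(NUM_CONSUMERS)]
for w in workers:
    w.start()
producer(100)
for _ in workers:
    jobs.put(None)  # one sentinel per worker, queued after all real jobs
for w in workers:
    w.join()
```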

Virtual Private Cloud (VPC) Design

Understanding VPC networking is fundamental to cloud architecture. Design private subnets for sensitive workloads, public subnets for internet-facing resources, and use security groups and network ACLs to control traffic flow.
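Python's standard `ipaddress` module is handy for planning subnets. This sketch assumes a hypothetical 10.0.0.0/16 VPC spread across two zones (the zone names are placeholders), carving /24 blocks and assigning one public and one private subnet per zone so each tier is redundant.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")  # hypothetical VPC CIDR
# Generator of 256 /24 blocks; AWS reserves 5 addresses per subnet, leaving 251 usable.
blocks = vpc.subnets(new_prefix=24)

plan = {}
for az in ["zone-a", "zone-b"]:  # placeholder zone names
    plan[f"public-{az}"] = next(blocks)   # e.g. load balancers, NAT gateways
    plan[f"private-{az}"] = next(blocks)  # e.g. app servers, databases

for name, cidr in plan.items():
    print(f"{name:16} {cidr}  ({cidr.num_addresses} addresses)")
```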

Database Selection

Choosing between SQL databases (RDS, Aurora) and NoSQL options (DynamoDB, MongoDB, Firestore) depends on your consistency requirements, query patterns, and scale needs. The CAP theorem helps frame the trade-offs: when a network partition occurs, a distributed system must choose between consistency (every read sees the latest write) and availability (every request gets a response).

Infrastructure as Code (IaC)

Tools like Terraform, CloudFormation, and Pulumi enable version-controlled, repeatable infrastructure deployments. This approach reduces configuration drift and makes infrastructure changes auditable and reversible.
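Under the hood, tools like Terraform compare the declared configuration with the real state of your infrastructure and propose a plan of changes. Here is a deliberately simplified sketch of that comparison with made-up resource names and attributes; real tools also track dependencies, provider defaults, and a state file.

```python
def plan(desired: dict, actual: dict) -> list:
    """Compare declared resources to what exists and list the changes needed."""
    changes = []
    for name, spec in desired.items():
        if name not in actual:
            changes.append(("create", name))
        elif actual[name] != spec:
            changes.append(("update", name))  # drift: changed outside of code
    for name in actual:
        if name not in desired:
            changes.append(("delete", name))
    return changes

# Hypothetical resources: someone opened port 22 by hand, a bucket is new,
# and an old queue was removed from the code.
desired = {"web_sg": {"ingress": [443]}, "logs_bucket": {"versioning": True}}
actual = {"web_sg": {"ingress": [443, 22]}, "old_queue": {}}
print(plan(desired, actual))
```

Because the desired state lives in version control, the same diff doubles as an audit trail, and reverting a commit reverts the infrastructure change.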

Deployment Patterns

As a cloud engineer, you should know the common deployment patterns. Blue-green deployments minimize downtime by maintaining two identical environments. Canary releases gradually roll out changes to a subset of users, allowing you to validate changes before full deployment. Rolling updates provide a middle ground, updating instances gradually without maintaining duplicate infrastructure.
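One common way to pick the canary "subset of users" is to hash a stable identifier, so each user consistently lands on the same version. A sketch assuming hypothetical user ID strings and a canary percentage; in practice the split often lives in the load balancer or service mesh (for example, weighted target groups).

```python
import hashlib

def bucket(user_id: str) -> int:
    """Map a user to a stable bucket in [0, 100) using a hash of their ID."""
    digest = hashlib.sha256(user_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100

def choose_version(user_id: str, canary_percent: int) -> str:
    # The same user always gets the same answer, so their experience doesn't flip-flop.
    return "canary" if bucket(user_id) < canary_percent else "stable"

users = [f"user-{i}" for i in range(10_000)]  # hypothetical user IDs
share = sum(choose_version(u, 5) == "canary" for u in users) / len(users)
print(f"{share:.1%} of users on canary")  # roughly 5%
```

Raising `canary_percent` from 5 to 25 keeps everyone already on the canary there, because a bucket below 5 is also below 25.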

12-Factor App

The 12-Factor App principles provide a blueprint for building cloud-native applications. These include storing configuration in environment variables, explicitly declaring dependencies, and designing stateless processes. Following these principles makes applications more portable and scalable in cloud environments.
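The config principle is the easiest to see in code: the same build runs in dev, staging, and production, and only the environment differs. A sketch with hypothetical variable names (`DATABASE_URL`, `PORT`, `DEBUG`).

```python
import os
from dataclasses import dataclass

@dataclass(frozen=True)
class Config:
    database_url: str
    port: int
    debug: bool

def load_config(env=os.environ) -> Config:
    """Read settings from environment variables; variable names are hypothetical."""
    return Config(
        database_url=env["DATABASE_URL"],            # required: fail fast if missing
        port=int(env.get("PORT", "8080")),           # optional, with a default
        debug=env.get("DEBUG", "false").lower() == "true",
    )
```

Passing `env` as a parameter keeps the function testable with a plain dict instead of mutating the real process environment.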

Hybrid Cloud

Many organizations need to connect on-premises infrastructure with cloud resources. Technologies like AWS Direct Connect, Azure ExpressRoute, and Google Cloud Interconnect provide dedicated network connections for consistent performance and enhanced security.

Observability

Observability is essential in cloud environments, where a single request can pass through many components. Effective observability requires logs, metrics, and traces. CloudWatch Logs, Prometheus metrics, and distributed tracing with tools like AWS X-Ray or OpenTelemetry provide the visibility needed to understand system behavior in production.
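Logs become far more useful across many services when they are structured and carry a shared trace ID you can search on. A minimal sketch using only Python's standard `logging` module to emit JSON lines; the `trace_id` field name is an assumption, and in a real system the ID would usually come from your tracing library's context propagation (for example, OpenTelemetry) rather than being generated by hand.

```python
import json
import logging
import uuid

class JsonFormatter(logging.Formatter):
    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "service": record.name,
            "message": record.getMessage(),
            "trace_id": getattr(record, "trace_id", None),  # attached via `extra=`
        })

handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("checkout")  # hypothetical service name
logger.addHandler(handler)
logger.setLevel(logging.INFO)

trace_id = uuid.uuid4().hex  # one ID per incoming request, forwarded to downstream calls
logger.info("order received", extra={"trace_id": trace_id})
logger.info("payment authorized", extra={"trace_id": trace_id})
```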

Database Sharding and Partitioning

As data grows, partitioning becomes crucial. Horizontal partitioning (sharding) distributes rows across multiple databases, while vertical partitioning splits data by columns or by feature, placing different tables or column groups in separate stores. Consistent hashing helps distribute data evenly and minimizes how much data has to move when nodes are added or removed.
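A compact consistent-hashing ring shows why node changes are cheap. Each shard is placed on a hash ring many times ("virtual nodes") to even out the load, and a key belongs to the first virtual node clockwise from its hash. The shard names, vnode count, and choice of SHA-256 are assumptions for illustration.

```python
import bisect
import hashlib

def _hash(key: str) -> int:
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

class ConsistentHashRing:
    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes  # virtual nodes per shard smooth out the distribution
        self._ring = []       # sorted list of (hash, node)
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove_node(self, node: str) -> None:
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def get_node(self, key: str) -> str:
        if not self._ring:
            raise LookupError("ring is empty")
        # First virtual node clockwise from the key's hash, wrapping around the ring.
        i = bisect.bisect(self._ring, (_hash(key), ""))
        return self._ring[i % len(self._ring)][1]

ring = ConsistentHashRing(["shard-a", "shard-b", "shard-c"])  # hypothetical shards
keys = [f"user:{i}" for i in range(10_000)]
before = {k: ring.get_node(k) for k in keys}
ring.add_node("shard-d")
moved = sum(before[k] != ring.get_node(k) for k in keys)
print(f"{moved / len(keys):.0%} of keys moved")
```

Adding a fourth shard moves roughly a quarter of the keys, all of them onto the new shard. With naive `hash(key) % n`, going from 3 to 4 shards would move about 75% of keys.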

Global Distribution

For global applications, consider read replicas for databases, CDN distribution for static content, and multi-region deployment for critical services. Tools like AWS Global Accelerator can improve performance by routing traffic through AWS's global network.
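Read replicas imply a read/write split in the application or data layer. A sketch with hypothetical region names and endpoints: writes always go to the single primary region, while reads go to the replica in the caller's region when one exists. Replication is often asynchronous, so replica reads can be slightly stale; paths that must read their own writes may still need the primary.

```python
PRIMARY = "us-east-1"  # hypothetical primary region
ENDPOINTS = {          # hypothetical database endpoints per region
    "us-east-1": "db-primary.us-east-1.example.internal",
    "eu-central-1": "db-replica.eu-central-1.example.internal",
    "ap-southeast-1": "db-replica.ap-southeast-1.example.internal",
}

def endpoint_for(query_type: str, client_region: str) -> str:
    if query_type == "write":
        return ENDPOINTS[PRIMARY]  # all writes converge on the primary
    # Reads stay local when a replica exists; otherwise fall back to the primary.
    return ENDPOINTS.get(client_region, ENDPOINTS[PRIMARY])
```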

🎯 Build Real-World Projects

The best way to learn these concepts is through hands-on practice. Try designing systems for common scenarios such as:

URL Shortener (like bit.ly): Focus on high read volume, global distribution, and analytics collection.

File Storage Service (like Dropbox): Consider file chunking, deduplication, synchronization, and conflict resolution.

Notification System: Design for different delivery channels (email, SMS, push), handling failures, and ensuring message ordering.

Real-time Chat Application: Focus on WebSocket connections, message ordering, presence indicators, and horizontal scaling.

Streaming Platform: Consider video encoding, CDN distribution, recommendation systems, and handling peak traffic.
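For the URL shortener exercise, a common starting point is to give each long URL a numeric ID (for example, from a database sequence) and encode it in base62 to get a short code. A sketch of just the encoding step; ID generation, storage, and redirects are left out, and the alphabet order is an arbitrary choice.

```python
import string

ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase  # 62 characters

def encode(n: int) -> str:
    """Turn a non-negative integer ID into a short base62 code."""
    if n < 0:
        raise ValueError("IDs must be non-negative")
    if n == 0:
        return ALPHABET[0]
    chars = []
    while n:
        n, rem = divmod(n, 62)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))

def decode(code: str) -> int:
    """Inverse of encode: turn a short code back into its integer ID."""
    n = 0
    for ch in code:
        n = n * 62 + ALPHABET.index(ch)
    return n

print(encode(125))  # "21"
```

Seven base62 characters cover 62^7, about 3.5 trillion IDs, which is why short links can stay short for a long time.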

Conclusion

System design for cloud roles requires understanding both fundamental principles and cloud-specific patterns. The key is balancing theoretical knowledge with practical experience. Start with well-architected frameworks from cloud providers (AWS Well-Architected Framework, Azure Architecture Center, Google Cloud Architecture Framework) and gradually build complexity as you gain experience.

Over to you: what other concepts would you add?

Liked this article? Feel free to drop ❤️ and restack with your friends.

If you have any feedback or questions 💬, comment below. See you in the next one.

Get in Touch

You can find me on Twitter and LinkedIn.

If you want to work with me or want to sponsor the newsletter, please email me at kisan.codes@gmail.com.

What topics would you like to see covered in future issues of The Cloud Handbook Newsletter? Please share your thoughts and questions.