🐳 How does Docker Work, Dockerize sample React Application, and Multi-stage Docker build. 🚀

In our previous container series, we looked at how containerization has revolutionized how we deploy web apps by creating a lightweight, portable, and scalable application bundle as a container and making deployment easy, reproducible, and efficient. We also looked at how containers work under the hood.

This week, we look at how Docker works under the hood, dockerize a sample React application, and learn about multi-stage builds in Docker.

Before we get started, tell us which cloud services you use at work for deploying containers.

How does Docker work?

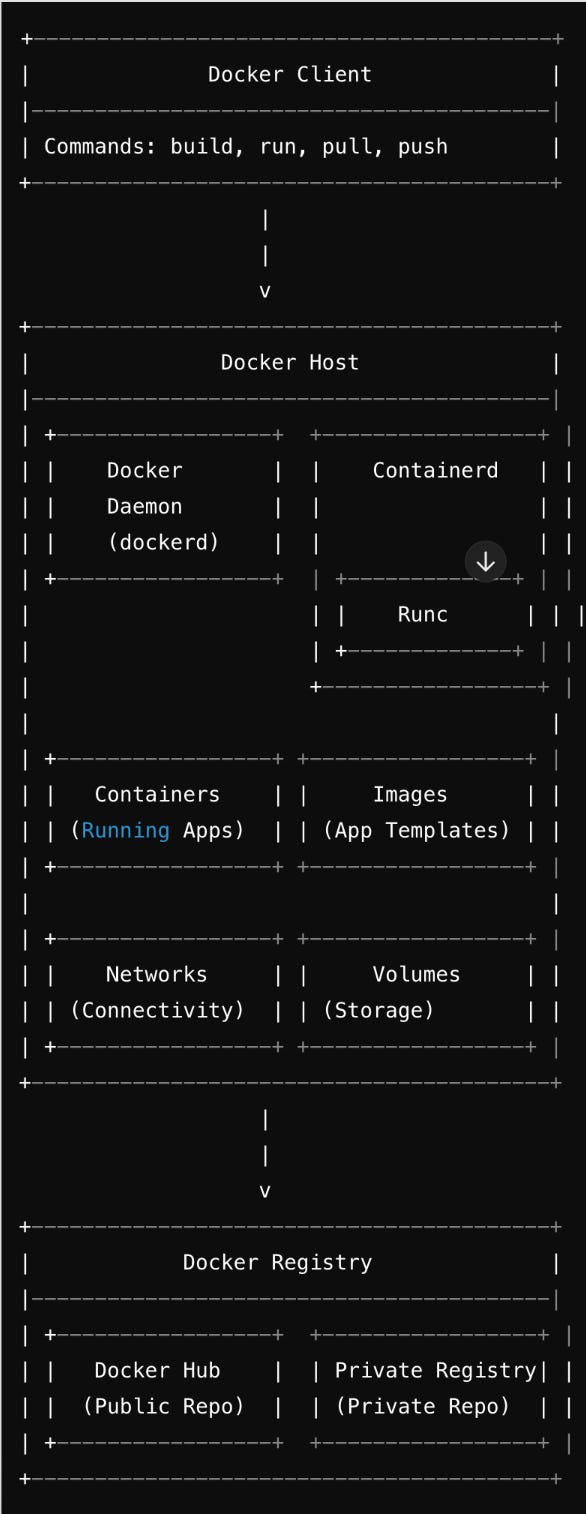

Docker uses a client-server architecture with three main components: the Docker Client, the Docker Host, and the Registry.

Docker Client

The interface through which users interact with the Docker Host. It sends commands to the Docker Daemon (part of the Docker Host) for execution. Commands like docker build, docker run, and docker pull are all issued through the Docker Client.

Docker Host

The Docker Host does the heavy lifting of building images and running containers.

Components:

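Docker Daemon (dockerd): The core service that runs on the host machine. It listens for requests from the Docker Client and manages images, containers, networks, and volumes.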

Containerd: A high-level container runtime daemon that manages the complete container lifecycle.

Runc: The low-level container runtime that actually runs the containers.

Containers: Instances of Docker images running as applications.

Images: Read-only templates used to create containers.

Networks: Configurations that allow Docker containers to communicate with each other.

Volumes: Persistent storage mechanisms for Docker containers.
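To make the client/daemon split concrete, here is a minimal shell sketch. It assumes a default Linux installation where dockerd listens on the Unix socket /var/run/docker.sock; the DOCKER_SOCK and DAEMON_CHECKED names are just chosen for this example. Other setups, such as Docker Desktop or a remote daemon, may expose a different endpoint.

```shell
#!/bin/sh
# Assumption: default Linux install, where dockerd listens on this Unix socket.
DOCKER_SOCK="${DOCKER_SOCK:-/var/run/docker.sock}"

if command -v docker >/dev/null 2>&1 && [ -S "$DOCKER_SOCK" ]; then
  # The CLI prints a "Client" section and, when the daemon answers, a "Server" section.
  docker version || echo "client found, but the daemon did not answer"

  # The same daemon can be queried without the CLI, over its HTTP API on the socket.
  curl --silent --unix-socket "$DOCKER_SOCK" http://localhost/version || true
  echo
  DAEMON_CHECKED=yes
else
  echo "Docker CLI or daemon socket not found; nothing to query"
  DAEMON_CHECKED=no
fi
```

The point of the second call is that the Docker Client is only one possible front end: anything that can speak the daemon's API can drive the Docker Host.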

Registry

A repository for Docker images, much like GitHub but for your container images.

Docker Hub is a public registry provided by Docker, Inc., but you can also set up your own private registry. Cloud providers offer hosted registries as well, such as Amazon Elastic Container Registry (ECR) on AWS.
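An image name also tells the client which registry to talk to. As a small illustration, the sketch below splits a fully qualified image reference into its parts using plain shell parameter expansion. The variable names are made up for this example, and the parsing only handles the simple registry/repository:tag form shown here, not digests or registries with a port number.

```shell
#!/bin/sh
# A fully qualified reference: registry host, repository path, and tag.
REF="docker.io/library/nginx:alpine"

REGISTRY_HOST="${REF%%/*}"   # everything before the first "/"
REMAINDER="${REF#*/}"        # everything after the first "/"
REPOSITORY="${REMAINDER%:*}" # repository path without the tag
TAG="${REMAINDER##*:}"       # text after the last ":"

echo "registry:   $REGISTRY_HOST"
echo "repository: $REPOSITORY"
echo "tag:        $TAG"
```

On Docker Hub, the short form docker pull nginx:alpine resolves to this same reference.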

Basic Docker Commands

CLI Commands: Users issue commands through the Docker Client using the Docker CLI.

docker build: Creates an image from a Dockerfile.

docker pull: Downloads an image from a registry.

docker run: Runs a container from an image.

That covers Docker at a high level.

Now let's get hands-on with Docker.

Project Setup

First, let's use Vite to create a React application. When prompted, name the project react-container and pick the React template.

npm create vite@latest

cd react-container

npm install

npm run dev

We now have a simple React app. To keep things simple, we will leave the React app as is and focus on creating a Docker container.

Before creating the container, let's list what we would need to do to deploy our app without Docker. Later, we will map each step to an instruction in the Dockerfile.

Create a virtual machine

Install Node.js on the machine

Copy the source code onto the machine (manually or using git)

Install the dependencies for the project

Build the app

Install nginx (or any web server)

Configure nginx to serve our app
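The steps above can be sketched as a shell script. This is a dry run that only prints the commands, under several assumptions that are not from the original project: a Debian/Ubuntu virtual machine, a placeholder REPO_URL, and nginx's default site serving files from /var/www/html. The run and deploy_without_docker helpers are hypothetical names for this example.

```shell
#!/bin/sh
# Dry-run sketch: prints each command instead of executing it.
# Assumptions: Debian/Ubuntu VM, placeholder REPO_URL, nginx serving /var/www/html.
REPO_URL="${REPO_URL:-https://example.com/your-team/react-container.git}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

deploy_without_docker() {
  run sudo apt-get update                               # the VM already exists
  run sudo apt-get install -y nodejs npm git            # install Node.js
  run git clone "$REPO_URL" react-container             # copy the source code
  run npm --prefix react-container install              # install dependencies
  run npm --prefix react-container run build            # build the app
  run sudo apt-get install -y nginx                     # install the web server
  run sudo cp -r react-container/dist/. /var/www/html/  # let nginx serve the build
}

deploy_without_docker
```

Every line here has to be repeated, by hand or by script, on each new machine. That repetition is what a Dockerfile captures once.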

Create the Dockerfile

Unoptimized Dockerfile

Create an nginx.conf file.

worker_processes auto;

events { worker_connections 1024; }

http {

server {

listen 80;

root /usr/share/nginx/html;

include /etc/nginx/mime.types;

error_page 404 /index.html;

}

}

Create the Dockerfile.

# Use an official Node runtime as the base image

FROM node:14-alpine

# Set the working directory inside the container

WORKDIR /app

# Copy package.json and package-lock.json to the working directory

COPY package*.json ./

# Install dependencies

RUN npm install

# Copy the rest of the application code to the working directory

COPY . .

# Build the React application

RUN npm run build

# Install Nginx

RUN apk add --no-cache nginx

# Copy the build output to the Nginx html directory

RUN cp -r /app/dist /usr/share/nginx/html

# Copy the Nginx configuration file

COPY nginx.conf /etc/nginx/nginx.conf

# Expose port 80

EXPOSE 80

# Command to run Nginx

CMD ["nginx", "-g", "daemon off;"]

Let's understand what we did in the Dockerfile step by step.

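FROM node:14-alpine

Instead of creating a virtual machine and installing Node.js manually, we start from an image that already has Node.js installed and build our container on top of it. Pick a tag whose Node.js version is supported by your Vite release; recent Vite versions need a newer Node.js than 14.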

COPY package*.json ./

RUN npm install

COPY . .

Here we copy package.json and package-lock.json from the host machine into the container.

Then we install the dependencies and copy the rest of the current working directory from the host into the container's working directory, which we set with WORKDIR /app.
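One thing COPY . . does not do on its own is skip files you would rather keep out of the image, such as a local node_modules folder. A .dockerignore file in the build context handles that. The sketch below writes one. The entries are a suggested starting point rather than something from the original project, and PROJECT_DIR is a placeholder that defaults to a scratch directory so the snippet is safe to try.

```shell
#!/bin/sh
# PROJECT_DIR is a placeholder; point it at your project root to use this for real.
PROJECT_DIR="${PROJECT_DIR:-$(mktemp -d)}"

# Paths listed here are left out of the build context, so COPY . . never sees them.
cat > "$PROJECT_DIR/.dockerignore" <<'EOF'
node_modules
dist
.git
EOF

echo "wrote $PROJECT_DIR/.dockerignore:"
cat "$PROJECT_DIR/.dockerignore"
```

Excluding node_modules matters most here: the image installs its own dependencies with npm install, so copying the host's copy would only add size and could overwrite what was installed inside the container.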

Earlier, when we listed the manual steps, we copied the code first and then installed the dependencies. In the Dockerfile, we first copy package.json, install the dependencies, and only then copy the rest of the code into the container.

The reason is that Docker manages files in layers (a union file system). Each instruction in the Dockerfile creates a layer, and a change in one layer invalidates the cache for every layer after it, so those layers have to be rebuilt. A poorly ordered Dockerfile therefore makes builds needlessly slow.

If we had copied our code first and then installed the dependencies, any change in the code would trigger a reinstall of the dependencies. With this ordering, dependencies are reinstalled only when package.json or package-lock.json changes.
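The idea can be illustrated without Docker at all. The sketch below is a deliberately simplified model, not Docker's actual cache implementation: it treats a checksum of the files matched by package*.json as the cache key for the COPY package*.json ./ step, then shows which kind of edit changes that key. The file contents and the manifest_key helper are invented for the example.

```shell
#!/bin/sh
# Simplified model of layer caching; Docker's real cache logic differs in detail.
WORK="$(mktemp -d)"
mkdir -p "$WORK/src"
printf '{"name":"react-container"}\n' > "$WORK/package.json"
printf 'export const App = 1;\n' > "$WORK/src/App.jsx"

# Fingerprint of what `COPY package*.json ./` would bring into the image.
manifest_key() {
  cat "$WORK"/package*.json | cksum
}

BEFORE="$(manifest_key)"

# Edit application code only: the manifest fingerprint stays the same.
printf 'export const App = 2;\n' > "$WORK/src/App.jsx"
AFTER_CODE_EDIT="$(manifest_key)"

# Edit the manifest: the fingerprint changes.
printf '{"name":"react-container","dependencies":{"react":"^18.0.0"}}\n' > "$WORK/package.json"
AFTER_DEP_EDIT="$(manifest_key)"

[ "$BEFORE" = "$AFTER_CODE_EDIT" ] && echo "code edit: npm install layer can be reused"
[ "$BEFORE" != "$AFTER_DEP_EDIT" ] && echo "dependency edit: npm install layer is rebuilt"

rm -rf "$WORK"
```

Because the source files are copied in a later instruction, editing them never touches the key for the dependency step, which is exactly why the Dockerfile copies package*.json separately.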

# Build the React application

RUN npm run build

# Install Nginx

RUN apk add --no-cache nginx

# Copy the build output to the Nginx html directory

RUN cp -r /app/dist /usr/share/nginx/html

# Copy the Nginx configuration file

COPY nginx.conf /etc/nginx/nginx.conf

# Expose port 80

EXPOSE 80

# Command to run Nginx

CMD ["nginx", "-g", "daemon off;"]

The rest of the Dockerfile builds the project, installs and configures the web server, copies the build output into the web server's html directory, and starts the web server.

Now, let's build the image.

docker build -t react-demo .

We name the image react-demo and use the current working directory as the build context.
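Once the image exists, you can inspect the layers its instructions produced. This is a minimal sketch that assumes the react-demo image from the build above is present locally; the LAYERS_SHOWN variable exists only for the example.

```shell
#!/bin/sh
# Assumes the react-demo image has been built on this machine.
if command -v docker >/dev/null 2>&1 && docker image inspect react-demo >/dev/null 2>&1; then
  # Lists the image's layers, newest first, with the instruction that created each one.
  docker history react-demo
  LAYERS_SHOWN=yes
else
  echo "react-demo image not found; run the docker build command first"
  LAYERS_SHOWN=no
fi
```

In the output, the rows for npm install and for the Node.js base image are typically the largest, which hints at the problem the multi-stage build addresses.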

Run the container with the following command, and you should see the result at localhost:3000.

docker run -d -p 3000:80 react-demo

Multi-stage Docker build

Although we have successfully built a Docker image, there are issues with this Dockerfile. When we build our React app, it is bundled into static files. If we just copy those static files into a web server, the app runs without Node.js or its dependencies installed. Keeping Node.js and the dependencies in the production image makes the image large and deployments slower.

If you run docker images, you can see the size of the image.

Now let's use a multi-stage build to reduce the size of the image. In a multi-stage build, we divide the Dockerfile into several stages and copy only the necessary files from one stage to the next. Only the files copied into or generated in the final stage remain in the final image.

Optimized Dockerfile

# Use an official Node runtime as the base image

FROM node:14-alpine as build

# Set the working directory inside the container

WORKDIR /app

# Copy package.json and package-lock.json to the working directory

COPY package*.json ./

# Install dependencies

RUN npm install

# Copy the rest of the application code to the working directory

COPY . .

# Build the React application

RUN npm run build

# Stage 2: Production environment

FROM nginx:alpine as prod

# Copy built artifacts from the build environment

COPY --from=build /app/dist /usr/share/nginx/html

# Copy the Nginx configuration file

COPY nginx.conf /etc/nginx/nginx.conf

# Expose port 80

EXPOSE 80

# Command to run Nginx

CMD ["nginx", "-g", "daemon off;"]

Here we mark the different stages in the Dockerfile

FROM node:14-alpine as build

and copy only the dist folder from the build stage into the web server image

COPY --from=build /app/dist /usr/share/nginx/html

Now, let's build the Docker image with the name react-demo-v2 and run the container.

docker build -t react-demo-v2 .

docker run -d -p 3001:80 react-demo-v2

If you visit localhost:3001, you should see the same result.

The final image size after we used multi-stage build is much smaller.
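To check the difference on your own machine, a short sketch like the following prints both sizes side by side. It assumes both images, react-demo and react-demo-v2, were built locally under those names; the CHECKED counter is only there for the example.

```shell
#!/bin/sh
# Assumes react-demo and react-demo-v2 were built locally under these names.
CHECKED=0
for image in react-demo react-demo-v2; do
  if command -v docker >/dev/null 2>&1 &&
     size_bytes="$(docker image inspect --format '{{.Size}}' "$image" 2>/dev/null)"; then
    # .Size is reported in bytes; convert to MiB for readability.
    echo "$image: $((size_bytes / 1024 / 1024)) MiB"
  else
    echo "$image: not found locally (or Docker is not installed)"
  fi
  CHECKED=$((CHECKED + 1))
done
```

The exact numbers depend on the base image tags and the project's dependencies, so treat them as a comparison between the two builds rather than absolute figures.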

Final Thought

We will continue to explore container technologies in more depth in the coming days, as it is one of the favorite topics in tech. Subscribe for more.

Over to you: how are you using Docker at work? Let us know in the comments.
